UN talks in Geneva aim to stop 'killer robots'

Armies of Terminator-like warriors fan out across the battlefield, destroying everything in their path, as swarms of fellow robots rain fire from the skies.

That dark vision could all too easily shift from science fiction to fact with disastrous consequences for the human race, unless such weapons are banned before they leap from the drawing board to the arsenal, campaigners warn.

On Tuesday, governments begin holding the first-ever talks focussed exclusively on so-called "lethal autonomous robots".

The four-day session of the UN Convention on Conventional Weapons in Geneva could chart the path towards preventing the nightmare scenario evoked by opponents, ahead of a fresh session in November.

"Killer robots would threaten the most fundamental of rights and principles in international law," warned Steve Goose, arms division director at Human Rights Watch.

"We don't see how these inanimate machines could understand or respect the value of life, yet they would have the power to determine when to take it away," he told reporters on the eve of the talks.

"The only answer is a preemptive ban on fully autonomous weapons," he added.

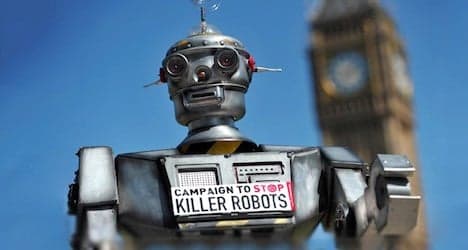

Goose's organization came together with a host of others to form the Campaign To Stop Killer Robots in April 2013, prodding nations into action.

Robot weapons are already deployed around the globe.

The best-known are drones, unmanned aircraft whose human controllers pull the trigger from a far-distant base. Controversy rages, especially over the civilian collateral damage caused when the United States strikes alleged Islamist militants.

Perhaps closest to the Terminator-type killing machine portrayed in Arnold Schwarzenegger's action films is a Samsung sentry robot used in South Korea, with the ability to spot unusual activity, talk to intruders and, when authorized by a human controller, shoot them.

Then there is the Phalanx gun system, deployed on US Navy ships, which can search for enemy fire and destroy incoming projectiles all by itself, or the X-47B, a plane-sized drone able to take off and land on aircraft carriers without a pilot and even refuel in the air.

Other countries on the cutting edge include Britain, Israel, China, Russia and Taiwan.

But it's the next step, the power to kill without a human handler, that rattles opponents of lethal autonomous robots the most.

"Checking the legitimacy of targets and determining proportional response requires deliberative reasoning," said Noel Sharkey, emeritus professor of robotics and artificial intelligence at Britain's University of Sheffield.

Experts predict that military research could produce such killers within 20 years, leaving current systems looking as obsolete as steam engines.

Power of life and death

Supporters of robot weapons say they offer life-saving potential in warfare, being able to get closer than troops to assess a threat properly, without tiring, becoming frightened or letting emotion cloud their decision-making.

But that is precisely what worries their critics.

"It's totally unconscionable that human beings think that it's OK to cede the power of life and death over other humans to machinery," said Jody Williams, who won the 1997 Nobel Peace Prize for her campaign for a land-mine ban treaty.

"If we don't inject a moral and ethical discussion into this, we won't control warfare," she said.

In November 2012, Washington imposed a 10-year human-control requirement for robot weapons, welcomed by campaigners as a first step.

Britain has said that existing arms control rules are sufficient to stem the risks.

But opponents say a specific treaty is essential because unclear ground rules could leave dangerous loopholes, not only for their use in warfare but also in policing.

"We would like this week to begin to build a consensus around the concept that there must always be meaningful human control over targeting and kill decisions, on the battlefield and in law enforcement situations," said Goose.

"Trying to rely on existing international humanitarian or human rights law and perhaps some concept of best principles simply will not suffice to ensure that these weapons are never developed, never produced, never used," he said.

With robotics research also being deployed for fire-fighting and bomb disposal, Sharkey underlined that the objection is not to autonomy per se.

"I have a robot vacuum cleaner at home, it's fully autonomous and I do not want it stopped," he said.

"There is just one thing that we don't want, and that's what we call the kill function."
